Building Production-Grade Microservices with Go and gRPC: A Step-by-Step Developer Guide with Example


My last article on Dev.to got a good response, so I thought I'd continue with the same language. In case you missed it, you can find it here: Using Golang to Build a Real-Time Notification System - A Step-by-Step Notification System Design Guide

ToC: What's in this article

- Introduction
- What is gRPC?
- Benefits of Using gRPC for Microservices
- gRPC vs. REST: A Comparison
- Getting Started with gRPC in Go
- Defining gRPC Services with Protocol Buffers
- Implementing gRPC Services in Go
- Error Handling and Fault Tolerance in gRPC
- Performance Optimization in gRPC Microservices
- Testing and Deployment Best Practices
- Real-Life Use Cases and Production-Grade Examples
- Conclusion: Building Production-Grade Microservices with gRPC

1. Introduction to Microservices and gRPC

Microservices have emerged as a powerful architectural paradigm for developing complex, scalable, and maintainable software systems. By breaking down applications into smaller, loosely coupled services, developers can create a more agile and resilient ecosystem. However, managing the communication between these services efficiently is crucial for the success of microservices, and this is where gRPC steps in.

In this section, we'll explore the core concepts of microservices and introduce gRPC, an open-source framework developed by Google that simplifies inter-service communication, making it ideal for building production-grade microservices in Go.

Understanding Microservices

Microservices represent a fundamental shift in how we design, deploy, and maintain software applications. Rather than building monolithic applications, microservices architecture encourages the development of small, independent services that focus on specific business capabilities. These services can be developed, deployed, and scaled independently, providing a level of agility and flexibility that is difficult to achieve with monolithic systems.

The benefits of microservices include:

- Scalability: You can scale individual services as needed, optimizing resource utilization.

- Flexibility: Different services can use different technologies, allowing for the best tool for the job.

- Resilience: Service failures are isolated, minimizing the impact on the entire system.

- Rapid Development: Small, dedicated teams can develop and deploy services more quickly.

- Easier Maintenance: Smaller codebases are easier to maintain and update.

Introduction to gRPC

gRPC is a high-performance, language-agnostic remote procedure call (RPC) framework. It was originally developed at Google and is now open source. (The project officially expands the name as the recursive "gRPC Remote Procedure Calls," although it is often read as "Google Remote Procedure Call.") gRPC is designed to simplify the development of efficient and robust microservices by giving services a standardized, efficient way to communicate with each other.

Key features of gRPC include:

- Efficient Communication: gRPC uses Protocol Buffers (Protobuf) for message serialization, which results in compact and efficient data transmission. It also utilizes HTTP/2 for multiplexing and reducing latency.

- Language Agnostic: gRPC supports multiple programming languages, making it suitable for building polyglot microservices.

- Code Generation: It generates client and server code, reducing the boilerplate code required for communication.

- Streaming: gRPC supports streaming, enabling various communication patterns, including unary (single request, single response), server streaming, client streaming, and bidirectional streaming.

2. Comparing gRPC with REST

In the world of microservices, communication between services is a fundamental aspect of the architecture. Traditionally, Representational State Transfer (REST) has been the go-to choice for building APIs and enabling communication between services. However, gRPC has emerged as a powerful alternative, offering several advantages over REST in specific scenarios.

The REST Paradigm

REST is an architectural style in which clients and servers communicate over HTTP. It relies on stateless communication and follows a set of principles, such as identifying resources with Uniform Resource Identifiers (URIs) and acting on them with HTTP methods (GET, POST, PUT, DELETE).

While REST has been widely adopted and remains a suitable choice for many applications, it has some limitations in microservices architectures:

- Serialization: REST typically relies on JSON or XML for data serialization. These formats can be inefficient, leading to larger payloads and increased processing time.

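- Latency: REST APIs most often run over HTTP/1.1, where a connection carries only one request at a time. Concurrent calls must either open additional connections or wait their turn, which adds latency when services communicate frequently over the network.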

- Client-Server Contract: Changes to the API often require updates to both the client and server, which can be cumbersome in a microservices environment.

The gRPC Advantage

gRPC takes a different approach to service communication. It is a high-performance, language-agnostic RPC framework that uses Protocol Buffers (Protobuf) for efficient data serialization and HTTP/2 for transport. This approach offers several key advantages:

- Efficient Data Serialization: gRPC uses Protobuf, which produces compact and efficient messages, reducing the size of payloads and improving performance.

- Multiplexing: HTTP/2, the transport protocol used by gRPC, lets many concurrent requests and responses share a single connection without waiting for one another. This reduces latency and improves efficiency.

- Code Generation: gRPC automatically generates client and server code from service definitions, reducing the need for manual coding and ensuring strong typing.

- Streaming: gRPC supports various streaming patterns, including server streaming, client streaming, and bidirectional streaming, which are well-suited for real-time communication.

When to Choose gRPC over REST

While both REST and gRPC have their strengths, there are scenarios where gRPC excels:

Low-Latency Requirements: If your microservices require low-latency communication, gRPC's use of HTTP/2 and efficient serialization make it a compelling choice.

Polyglot Environments: In a polyglot microservices environment where services are implemented in different programming languages, gRPC's language-agnostic nature is advantageous.

Efficiency and Compactness: When bandwidth usage and message size are critical, gRPC's compact Protobuf encoding and HTTP/2 header compression (HPACK) reduce the number of bytes sent per call.

Streaming and Real-Time Communication: If your microservices require real-time communication or support for streaming data, gRPC's built-in support for streaming is a strong advantage.

It's important to note that the choice between gRPC and REST should be based on the specific requirements of your microservices architecture. In some cases, a combination of both may be the most suitable solution, leveraging the strengths of each communication paradigm where they matter most.

3. Setting Up the Development Environment

Before you dive into building microservices with gRPC and Go, it's essential to set up your development environment correctly. In this section, we'll walk through the necessary steps to prepare your system for microservices development.

1. Install Go

Go, also known as Golang, is the language this guide uses to build gRPC microservices. If you haven't already installed Go, follow these steps to get started:

Download Go: Visit the official Go website (golang.org/dl) and download the Go installer for your operating system.

Install Go: Run the installer and follow the on-screen instructions to install Go on your system. Ensure that Go's bin directory is added to your system's PATH variable.

Verify Installation: Open a terminal and run the following command to verify that Go is correctly installed:

go version

This command should display the installed Go version.

2. Install gRPC Tools

To work with gRPC, you'll need the Protocol Buffers compiler (protoc) and two Go code-generation plugins: protoc-gen-go, which generates the message types, and protoc-gen-go-grpc, which generates the gRPC client and server code. Follow these steps to install them:

Install Protobuf Compiler: Download a prebuilt protoc release for your operating system from the official repository (github.com/protocolbuffers/protobuf/releases), or install it with a package manager (for example, brew install protobuf on macOS or apt install protobuf-compiler on Debian/Ubuntu).

Install the Go Protobuf Plugin: Use go install (in current Go versions, go get only manages module dependencies and no longer installs commands):

go install google.golang.org/protobuf/cmd/protoc-gen-go@latest

Install the gRPC Plugin: Install protoc-gen-go-grpc, which generates the gRPC service code:

go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@latest

3. Verify gRPC Tools Installation

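To ensure that the tools are correctly installed, run the following commands:

protoc --version
protoc-gen-go-grpc --version

Each command should print a version number. If the plugin isn't found, add the directory go install writes to (GOBIN if set, otherwise $(go env GOPATH)/bin) to your PATH; protoc finds its plugins by searching PATH.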
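If protoc later complains that a plugin program can't be found, the usual cause is that it isn't on PATH. As a quick check, this small Go program (a convenience sketch, not part of any official tooling) reports which of the three tools exec.LookPath can't find:

```go
package main

import (
	"fmt"
	"os/exec"
)

// missingTools returns the names in tools that cannot be found on PATH.
// protoc locates its plugins (protoc-gen-go, protoc-gen-go-grpc) by name
// on PATH, so anything reported here will also fail during code generation.
func missingTools(tools []string) []string {
	var missing []string
	for _, t := range tools {
		if _, err := exec.LookPath(t); err != nil {
			missing = append(missing, t)
		}
	}
	return missing
}

func main() {
	missing := missingTools([]string{"protoc", "protoc-gen-go", "protoc-gen-go-grpc"})
	if len(missing) > 0 {
		fmt.Println("not found on PATH:", missing)
		return
	}
	fmt.Println("all gRPC code-generation tools found")
}
```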

4. Set Up a Workspace

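Create a project directory for your microservices and initialize it as a Go module, for example with go mod init yourmodule (substitute your own module path, such as a repository URL). This directory will house your Go source code and Protobuf files, and the module path becomes the prefix of your import paths, including those of the generated code.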

5. Get Started with Your Editor

Choose an integrated development environment (IDE) or code editor that you're comfortable with and that supports Go. Popular choices include Visual Studio Code with the Go extension and GoLand. Make sure your editor is properly configured for Go development (for example, with the gopls language server for completion and navigation).

With your development environment set up, you're ready to start building microservices with gRPC and Go.


4. Creating Your First gRPC Service

Now that your development environment is set up, it's time to dive into creating your first gRPC service in Go. In this section, we'll guide you through the process, from defining service methods to generating the necessary code.

1. Define Your Service

Begin by defining your gRPC service. gRPC services are defined using Protocol Buffers (Protobuf) in a .proto file. This file specifies the service methods and the message types that the service will use. Here's an example of a simple .proto file:

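syntax = "proto3";

package helloworld;

// Go import path of the generated package; adjust it to match your module.
option go_package = "yourmodule/yourprotofile";

service Greeter {
  rpc SayHello (HelloRequest) returns (HelloReply);
}

message HelloRequest {
  string name = 1;
}

message HelloReply {
  string message = 1;
}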

In this example, we define a Greeter service with a single method, SayHello, which takes a HelloRequest message as input and returns a HelloReply message.

2. Generate Code

To create the server and client code for your gRPC service from the .proto file, use the protoc compiler with the appropriate plugins. Here's how to generate the Go code:

protoc --go_out=. --go-grpc_out=. path/to/your/protofile.proto

Replace path/to/your/protofile.proto with the actual path to your .proto file.

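This command generates two files: protofile.pb.go (the message types) and protofile_grpc.pb.go (the client and server stubs). By default they are written under the current directory in a subdirectory that follows the go_package import path; if you run protoc from your module root, adding --go_opt=module=yourmodule --go-grpc_opt=module=yourmodule strips the module prefix so the files land in ./yourprotofile, matching the import path used below.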

3. Implement the Server

Now, it's time to implement the server. Here's a basic example of a gRPC server in Go:

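package main

import (
	"context"
	"log"
	"net"

	"google.golang.org/grpc"

	pb "yourmodule/yourprotofile" // generated package; replace with your import path
)

// server implements the Greeter service. Embedding UnimplementedGreeterServer
// is required by current protoc-gen-go-grpc output and keeps the server
// compiling if new RPCs are added to the .proto file later.
type server struct {
	pb.UnimplementedGreeterServer
}

// SayHello handles a single unary request.
func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
	return &pb.HelloReply{Message: "Hello, " + in.GetName()}, nil
}

func main() {
	lis, err := net.Listen("tcp", ":50051")
	if err != nil {
		log.Fatalf("Failed to listen: %v", err)
	}

	s := grpc.NewServer()
	pb.RegisterGreeterServer(s, &server{})

	log.Println("Server started on :50051")
	if err := s.Serve(lis); err != nil {
		log.Fatalf("Failed to serve: %v", err)
	}
}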

In this code, the server struct implements the Greeter service by embedding the generated UnimplementedGreeterServer and providing SayHello, which processes each incoming request and returns a response.

4. Implement the Client

To create a client that can communicate with your gRPC service, you'll need to write Go code to interact with the service's API. Here's a simple client example:

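package main

import (
	"context"
	"log"
	"os"
	"time"

	"google.golang.org/grpc"
	"google.golang.org/grpc/credentials/insecure"

	pb "yourmodule/yourprotofile" // generated package; replace with your import path
)

const (
	address     = "localhost:50051"
	defaultName = "world"
)

func main() {
	// Plaintext connection for local development only; use TLS credentials in production.
	// grpc.NewClient requires grpc-go v1.63 or later; older versions use grpc.Dial with the same options.
	conn, err := grpc.NewClient(address, grpc.WithTransportCredentials(insecure.NewCredentials()))
	if err != nil {
		log.Fatalf("Failed to create client: %v", err)
	}
	defer conn.Close()

	c := pb.NewGreeterClient(conn)

	name := defaultName
	if len(os.Args) > 1 {
		name = os.Args[1]
	}

	// Bound the call with a deadline so a slow server can't hang the client.
	ctx, cancel := context.WithTimeout(context.Background(), time.Second)
	defer cancel()

	r, err := c.SayHello(ctx, &pb.HelloRequest{Name: name})
	if err != nil {
		log.Fatalf("Could not greet: %v", err)
	}
	log.Printf("Greeting: %s", r.GetMessage())
}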

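This client creates a client connection to the gRPC server (plaintext, which is only appropriate for local development), calls the SayHello method with a one-second deadline, and prints the response. With grpc.NewClient, the underlying connection is established lazily, on the first RPC.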

5. Run Your gRPC Service

To run your gRPC service, start the server first (for example, with go run . in the server's directory), then run the client in a second terminal, optionally passing a name as its first argument.

With this simple example, you've successfully created a gRPC service in Go, implemented a server, and connected to it with a client.

Before continuing, one important point. Much like this knowledge bomb, I run a developer-centric community on Slack, where we discuss these kinds of topics, implementations, integrations, some truth bombs, weird chats, virtual meets, and everything else that helps a developer stay sane ;) After all, too much knowledge can be dangerous too.

I'm inviting you to join our free community, take part in discussions, and share your freaking experience & expertise. Fill out this form, and a Slack invite will land in your inbox in a few days. We have amazing folks from some great companies, and you won't want to miss interacting with them. Invite Form

And I'd be highly obliged if you could share the form with your dev friends who are givers.

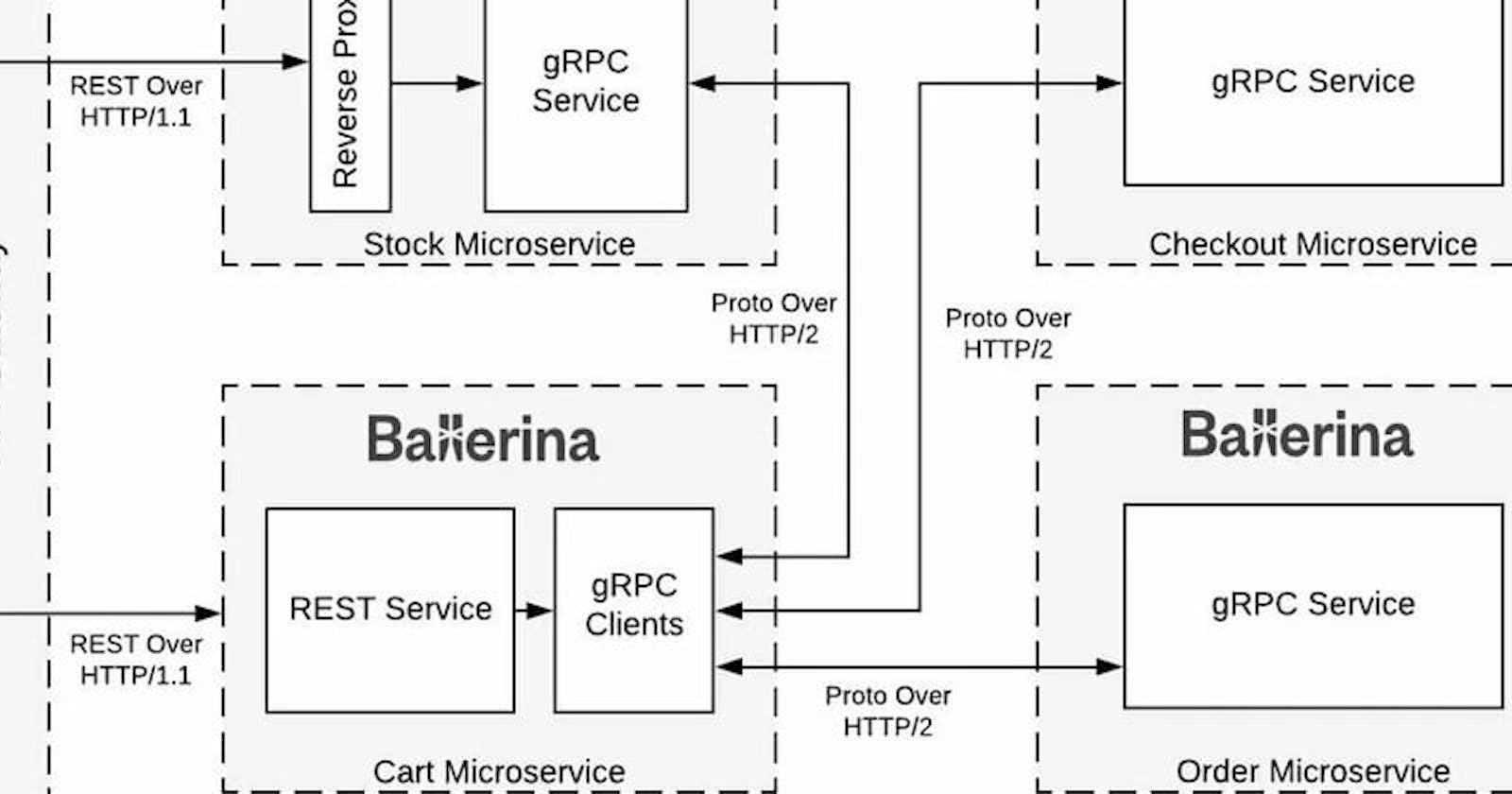

5. Microservices Communication with gRPC

One of the strengths of gRPC is its support for various communication patterns, which are essential for building efficient microservices. In this section, we'll dive into the different communication patterns offered by gRPC and how to use them effectively in your microservices architecture.

1. Unary RPC

Unary RPC is the simplest form of gRPC communication. In a unary RPC, the client sends a single request to the server and waits for a single response. This pattern is similar to traditional request-response communication in REST.

Here's an example of defining a unary RPC method in a .proto file:

service MyService {
  rpc GetResource (ResourceRequest) returns (ResourceResponse);
}

message ResourceRequest {
  string resource_id = 1;
}

message ResourceResponse {
  string data = 1;
}

2. Server Streaming RPC

In server streaming RPC, the client sends a single request to the server and receives a stream of responses. This pattern is useful when the server needs to push multiple pieces of data to the client. For instance, in a chat application, the server can continuously stream messages to the client.

Here's an example of defining a server streaming RPC method:

service ChatService {
  rpc StreamMessages (MessageRequest) returns (stream MessageResponse);
}

message MessageRequest {
  string user_id = 1;
}

message MessageResponse {
  string message = 1;
}
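On the client side, a server-streaming call returns a stream whose Recv method yields one message at a time and returns io.EOF once the server has finished. The sketch below models that receive loop with a small hypothetical interface (messageStream) and an in-memory fake, so it runs without generated code; with real generated code you'd call Recv on the stream returned by the generated StreamMessages client method.

```go
package main

import (
	"errors"
	"fmt"
	"io"
)

// messageStream is a hypothetical stand-in for the generated client stream:
// Recv returns the next message, or io.EOF when the server is done.
type messageStream interface {
	Recv() (string, error)
}

// fakeStream replays a fixed list of messages, then reports io.EOF.
type fakeStream struct {
	msgs []string
}

func (f *fakeStream) Recv() (string, error) {
	if len(f.msgs) == 0 {
		return "", io.EOF
	}
	m := f.msgs[0]
	f.msgs = f.msgs[1:]
	return m, nil
}

// collect reads until the server closes the stream. io.EOF marks a normal
// end of stream; any other error means the RPC failed and is returned as-is.
func collect(stream messageStream) ([]string, error) {
	var out []string
	for {
		msg, err := stream.Recv()
		if errors.Is(err, io.EOF) {
			return out, nil
		}
		if err != nil {
			return out, err
		}
		out = append(out, msg)
	}
}

func main() {
	msgs, err := collect(&fakeStream{msgs: []string{"Message 0", "Message 1", "Message 2"}})
	if err != nil {
		panic(err)
	}
	fmt.Println(msgs)
}
```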

3. Client Streaming RPC

Client streaming RPC is the reverse of server streaming. In this pattern, the client sends a stream of requests to the server and receives a single response. This can be useful in scenarios where the client needs to upload a large amount of data to the server, such as uploading a file.

Here's an example of defining a client streaming RPC method:

service FileUploadService {
  rpc UploadFile (stream FileRequest) returns (FileResponse);
}

message FileRequest {
  bytes data_chunk = 1;
}

message FileResponse {
  string status = 1;
}

4. Bidirectional Streaming RPC

Bidirectional streaming RPC is the most flexible communication pattern. In this pattern, both the client and server can send a stream of messages to each other simultaneously. This is ideal for real-time applications where both parties need to exchange data continuously.

Here's an example of defining a bidirectional streaming RPC method:

service ChatService {
  rpc Chat (stream ChatRequest) returns (stream ChatResponse);
}

message ChatRequest {
  string message = 1;
}

message ChatResponse {
  string reply = 1;
}
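A minimal bidirectional handler can be sketched as an echo loop. Here chatStream is a hypothetical, string-based stand-in for the generated ChatService_ChatServer, whose Recv and Send use *ChatRequest and *ChatResponse. This version replies in lock step; to send and receive independently, grpc-go allows one goroutine to call Send while another calls Recv on the same stream, but Send must not be called from several goroutines at once.

```go
package main

import (
	"errors"
	"fmt"
	"io"
)

// chatStream is a hypothetical stand-in for the generated bidirectional
// server stream, reduced to plain strings so the sketch runs on its own.
type chatStream interface {
	Recv() (string, error)
	Send(reply string) error
}

// chat replies to each incoming message as it arrives. Returning from the
// handler ends the RPC; returning nil reports status OK to the client.
func chat(stream chatStream) error {
	for {
		msg, err := stream.Recv()
		if errors.Is(err, io.EOF) {
			return nil // the client has closed its sending side
		}
		if err != nil {
			return err
		}
		if err := stream.Send("echo: " + msg); err != nil {
			return err
		}
	}
}

// fakeChat replays queued client messages and records the server's replies.
type fakeChat struct {
	in  []string
	out []string
}

func (f *fakeChat) Recv() (string, error) {
	if len(f.in) == 0 {
		return "", io.EOF
	}
	m := f.in[0]
	f.in = f.in[1:]
	return m, nil
}

func (f *fakeChat) Send(reply string) error {
	f.out = append(f.out, reply)
	return nil
}

func main() {
	f := &fakeChat{in: []string{"hi", "how are you?"}}
	if err := chat(f); err != nil {
		panic(err)
	}
	fmt.Println(f.out)
}
```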

5. Implementing Communication Patterns

To implement these communication patterns, implement the methods of the generated server interface (for the chat example, ChatServiceServer) on a struct that embeds the generated UnimplementedChatServiceServer, and put your logic for processing requests and sending responses there. Clients call the matching methods on the generated client stub.

Here's a simplified example of implementing a server streaming RPC in Go:

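// Requires the "fmt" import in the file that contains this method.
func (s *server) StreamMessages(req *pb.MessageRequest, stream pb.ChatService_StreamMessagesServer) error {
	// Simulate streaming by sending five messages to the client.
	for i := 0; i < 5; i++ {
		// Stop early if the client cancelled or its deadline expired.
		if err := stream.Context().Err(); err != nil {
			return err
		}
		response := &pb.MessageResponse{Message: fmt.Sprintf("Message %d", i)}
		if err := stream.Send(response); err != nil {
			return err
		}
	}
	// Returning nil ends the stream with status OK.
	return nil
}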

6. Real-World Use Cases

Each of these communication patterns has its use cases in real-world microservices scenarios. For example:

- Unary RPC: Used for simple request-response operations.

- Server Streaming RPC: Suitable for sending real-time updates, such as stock market data or chat messages.

- Client Streaming RPC: Useful for uploading large files or aggregating data from multiple sources.

- Bidirectional Streaming RPC: Ideal for interactive applications where both client and server need to send data in real time.

Understanding and applying these communication patterns is essential for building efficient and responsive microservices.

6. Building Secure gRPC Microservices

Security is a paramount concern in microservices architecture. Protecting your services from unauthorized access and ensuring data privacy are essential. In this section, we'll explore the security aspects of gRPC and how to build secure microservices.

1. Authentication and Authorization

gRPC has built-in support for authentication (TLS and per-call credentials such as tokens) and provides interceptors as the natural hook for authorization logic. Here's how you can implement these security features:

Authentication: gRPC supports various authentication methods, including token-based authentication, SSL/TLS, and more. Choose the authentication method that suits your needs and ensure that your microservices are accessible only to authenticated clients.

Authorization: Use role-based access control (RBAC) or other authorization mechanisms to define who can access specific gRPC methods. By enforcing authorization policies, you can control which clients are allowed to perform certain actions.

2. Transport Layer Security (TLS)

Securing the communication between gRPC services is crucial. gRPC supports the Transport Layer Security (TLS) protocol for this: TLS encrypts the data exchanged between services and keeps it confidential.

You can enable TLS for your gRPC server by providing TLS certificates and configuring your server to use them. Clients can then connect securely over HTTP/2 with TLS.

3. Service Identity

In a microservices architecture, verifying the identity of the services you're communicating with is vital. By using certificates and keys, you can establish the identity of your services and ensure that clients are connecting to the right services.

Implement mutual TLS (mTLS) authentication to verify the identity of both the client and the server. This adds an extra layer of security by ensuring that the client and server can trust each other's identity.

4. Rate Limiting and Quotas

To prevent abuse and ensure fair resource usage, consider implementing rate limiting and quotas for your gRPC services. Rate limiting controls the number of requests a client can make within a specific time frame, while quotas restrict the total amount of resources a client can consume.

By enforcing rate limiting and quotas, you can protect your services from being overwhelmed and maintain fair resource allocation.

5. Service Discovery and Load Balancing

Security should also extend to service discovery and load balancing. Ensure that your service discovery mechanisms and load balancers are protected from unauthorized access. Implement security policies to restrict access to these critical components.

6. Logging and Monitoring

Effective logging and monitoring are essential for identifying and responding to security threats and anomalies. Use tools like Prometheus, Grafana, and centralized logging systems to monitor the health and security of your microservices. Set up alerts and alarms to detect suspicious activities.

7. Security Best Practices

Follow these security best practices when building secure gRPC microservices:

Regularly update dependencies, including gRPC libraries, to patch security vulnerabilities.

Implement strong password policies and access controls for any databases or external services your microservices interact with.

Conduct security audits and penetration testing to identify and address vulnerabilities.

Educate your development and operations teams about security best practices and the latest threats.

Plan for incident response and disaster recovery to minimize the impact of security breaches.

Security is an ongoing process, and it's crucial to stay vigilant and adapt to new security threats as they emerge.

7. Error Handling and Fault Tolerance


Error handling and fault tolerance are critical aspects of building robust microservices. In this section, we'll delve into the importance of effective error handling and strategies to ensure fault tolerance in your gRPC-based microservices.

1. Understanding Error Handling

In microservices architecture, errors can occur at any level, from network issues to service-specific problems. Effective error handling is essential to maintain the reliability of your services.

Use gRPC Status Codes: gRPC defines a set of status codes to indicate the result of a call. These status codes provide a standardized way to communicate errors between clients and servers. Common status codes include OK, NotFound, PermissionDenied, and Unavailable.

Provide Descriptive Error Messages: Along with status codes, include descriptive error messages that can help developers diagnose issues. Clear error messages facilitate troubleshooting and debugging.

2. Implementing Retry Policies

Retry policies can help your microservices recover from transient errors. When a request fails due to network issues or temporary service unavailability, the client can retry the operation. Here's how to implement retry policies:

Exponential Backoff: Use exponential backoff to progressively increase the time between retries. This approach helps prevent overwhelming the service with too many retry attempts.

Retry Limits: Set a reasonable limit on the number of retry attempts to avoid excessive resource consumption.

3. Circuit Breakers

Circuit breakers keep callers from overloading a service that is already struggling. When a client observes a high rate of errors from a downstream service, its circuit breaker "opens" and fails further requests immediately instead of sending them. This prevents cascading failures and gives the service time to recover. After a cooldown period, the breaker lets trial requests through ("half-open"); if they succeed, it "closes" and normal traffic resumes.

4. Rate Limiting

Rate limiting restricts the number of requests a client can make in a given time frame. Implementing rate limiting is a proactive way to prevent a single client from overwhelming your microservices with excessive requests, which could lead to performance degradation or denial of service.

5. Graceful Degradation

Plan for graceful degradation when building microservices. This means that even if a specific service is unavailable or experiencing issues, your application can continue to provide limited functionality. For example, if a product recommendation service is down, your e-commerce application can still display product details and allow users to make purchases.

6. Observability and Monitoring

Implement comprehensive observability and monitoring for your microservices to detect and respond to issues in real time. Use tools like Prometheus, Grafana, and distributed tracing systems to gain insights into the health and performance of your services.

7. Automated Testing

Automated testing, including unit tests, integration tests, and load testing, is crucial to validate the reliability and fault tolerance of your microservices. Identify and address potential failure scenarios during testing.

8. Graceful Shutdown

Implement graceful shutdown procedures for your microservices. When a service needs to be taken offline for maintenance or scaling, ensure that it can complete any in-flight requests and connections before shutting down.

9. Distributed Tracing

Distributed tracing helps you track the flow of a request through multiple microservices. It is invaluable for diagnosing performance issues and understanding the interactions between services.

Effective error handling and fault tolerance are integral to building production-grade microservices. By applying these strategies, you can ensure that your services can gracefully handle various failure scenarios and continue to provide reliable functionality.

8. Performance Optimization in gRPC Microservices

Optimizing the performance of your gRPC microservices is crucial to ensure responsiveness and efficiency. In this section, we'll delve into various performance optimization techniques and best practices.

1. Load Balancing

Load balancing is essential to distribute incoming requests across multiple instances of a service, ensuring that no single instance is overwhelmed. To optimize performance:

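Use Load Balancers: Employ load balancers such as NGINX, HAProxy, or cloud-based load balancers to distribute traffic across your gRPC services. Because gRPC keeps long-lived HTTP/2 connections, a connection-level (L4) balancer tends to pin all of a client's requests to a single backend; prefer a balancer that understands HTTP/2 and balances individual requests (L7).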

Implement Client-Side Load Balancing: In addition to server-side load balancing, gRPC supports client-side load balancing, allowing clients to distribute requests intelligently.
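grpc-go ships a built-in round_robin policy that you can enable with grpc.WithDefaultServiceConfig(`{"loadBalancingConfig": [{"round_robin":{}}]}`) together with a target that resolves to several addresses (for example a dns:/// target). The core idea is simple enough to sketch with the standard library; the backend addresses below are placeholders.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// roundRobin hands out backend addresses in rotation. The counter is advanced
// atomically, so pick is safe to call from many goroutines.
type roundRobin struct {
	counter  uint64   // first field keeps it 64-bit aligned for atomics on 32-bit platforms
	backends []string // must be non-empty
}

func (r *roundRobin) pick() string {
	n := atomic.AddUint64(&r.counter, 1) - 1
	return r.backends[n%uint64(len(r.backends))]
}

func main() {
	// Placeholder addresses for three replicas of one service.
	rr := &roundRobin{backends: []string{"10.0.0.1:50051", "10.0.0.2:50051", "10.0.0.3:50051"}}
	for i := 0; i < 4; i++ {
		fmt.Println(rr.pick())
	}
}
```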

2. Connection Pooling

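Connection pooling helps manage connections to gRPC services efficiently. Rather than opening a new connection for every request, reuse existing ones to avoid repeated TCP and TLS handshakes. Note that a single gRPC client connection (ClientConn in Go) already multiplexes many concurrent RPCs over HTTP/2, so one shared connection per target is usually enough; a small pool pays off only under very high load, when the per-connection limit on concurrent streams becomes the bottleneck.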

3. Payload Compression

Compressing gRPC message payloads reduces the amount of data transferred over the network. It helps most for large, compressible messages on bandwidth-constrained links; it costs CPU, and very small messages can even grow. The gRPC compression spec defines gzip and deflate, and grpc-go ships a gzip compressor.

4. Protocol Buffers

Protocol Buffers (Protobuf) is the default serialization format for gRPC. Protobuf is efficient in terms of both speed and size, making it a performance-optimized choice for message serialization.

5. Caching

Implement caching strategies to reduce the need to repeatedly request the same data from your services. Cache frequently used data at the client or server side to minimize response times.

6. Connection Keep-Alive

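gRPC's HTTP/2 connections are already long-lived and reused across requests. gRPC keepalive adds periodic HTTP/2 PING frames on top of that, which detect dead connections quickly and stop proxies or load balancers from closing connections that look idle. In grpc-go, configure it with keepalive.ClientParameters on the client and keepalive.ServerParameters (plus a keepalive.EnforcementPolicy) on the server.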

7. Use HTTP/2

gRPC leverages HTTP/2, which provides benefits such as multiplexing, header compression, and flow control. These features contribute to a more efficient and responsive communication protocol.

8. Profiling and Optimization

Regularly profile your gRPC services to identify performance bottlenecks. Tools like pprof can help you understand the performance characteristics of your application. Optimize critical code paths based on profiling data.

9. Service Level Agreements (SLAs)

Define and adhere to service level agreements for your microservices. SLAs set expectations for response times and availability, allowing you to measure performance against agreed-upon criteria.

10. Testing and Benchmarking

Conduct thorough performance testing and benchmarking to assess the behavior of your microservices under different load conditions. This helps you identify performance bottlenecks and optimize your services accordingly.

11. Distributed Caching

For microservices that rely on frequently accessed data, consider using distributed caching systems like Redis to store and retrieve data more quickly. Distributed caching can significantly reduce the load on your backend services.

12. Containerization and Orchestration

Containerization platforms like Docker and orchestration systems like Kubernetes help you keep up with load by automating the deployment and scaling of microservices, for example by adding replicas as traffic grows.

13. Content Delivery Networks (CDNs)

Leverage CDNs to cache and deliver static assets closer to end users. This improves response times and keeps that traffic off your microservices and the rest of your infrastructure.

By applying these performance optimization strategies, you can ensure that your gRPC-based microservices are highly responsive and efficient, providing an optimal experience for your users.

9. Testing and Deployment Best Practices

In the world of microservices, testing and deployment are crucial aspects of ensuring that your services are reliable, maintainable, and scalable. In this section, we'll explore best practices for testing and deploying gRPC-based microservices.

1. Unit Testing

Unit testing is the foundation of quality assurance in microservices. Test each individual component of your microservice in isolation. For gRPC services, this means testing individual service methods to ensure they produce the correct output for a given input.
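As a minimal sketch of a table-driven unit test, the example below checks a hypothetical validatePaymentRequest function that a ProcessPayment handler might call before doing any I/O. PaymentRequest is a hand-written struct (order ID, amount, payment method), not a protoc-generated type. The cases run from main so the sketch is self-contained. In a real project they would live in a _test.go file, using t.Run and t.Errorf.

```go
package main

import (
	"errors"
	"fmt"
)

// PaymentRequest is a hand-written stand-in for a generated request message.
type PaymentRequest struct {
	OrderID       string
	Amount        float64
	PaymentMethod string
}

// validatePaymentRequest is hypothetical business logic, pure and easy to test.
func validatePaymentRequest(req PaymentRequest) error {
	switch {
	case req.OrderID == "":
		return errors.New("order_id is required")
	case req.Amount <= 0:
		return errors.New("amount must be positive")
	case req.PaymentMethod == "":
		return errors.New("payment_method is required")
	}
	return nil
}

func main() {
	// Each row is one input and whether an error is expected.
	cases := []struct {
		name    string
		req     PaymentRequest
		wantErr bool
	}{
		{"valid", PaymentRequest{"o-1", 19.99, "card"}, false},
		{"missing order", PaymentRequest{"", 19.99, "card"}, true},
		{"zero amount", PaymentRequest{"o-1", 0, "card"}, true},
		{"missing method", PaymentRequest{"o-1", 5, ""}, true},
	}
	failures := 0
	for _, tc := range cases {
		err := validatePaymentRequest(tc.req)
		if (err != nil) != tc.wantErr {
			failures++
			fmt.Printf("FAIL %s: err=%v, wantErr=%v\n", tc.name, err, tc.wantErr)
		}
	}
	fmt.Printf("%d/%d cases passed\n", len(cases)-failures, len(cases))
}
```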

2. Integration Testing

Integration testing focuses on the interactions between microservices. In the context of gRPC, it's essential to test how your services communicate and collaborate. This involves testing the end-to-end flow of requests and responses between services.

3. Mocking Dependencies

Use mocking frameworks to simulate external dependencies, such as databases or third-party services, during testing. This allows you to isolate the component you're testing and ensure that it functions correctly without relying on external factors.

4. Continuous Integration (CI)

Implement continuous integration pipelines that automatically build, test, and verify your microservices with each code change. CI pipelines ensure that code changes don't introduce regressions and help maintain a stable codebase.

5. Continuous Deployment (CD)

Continuous deployment automates the process of releasing your microservices to production. When combined with CI, it allows you to automatically deploy changes to your services once they pass all tests. Because any change that passes the pipeline can reach production, the tests in your CD pipeline must be rigorous enough to catch bugs before they ship.

6. Canary Releases

Canary releases involve gradually rolling out new versions of your microservices to a subset of users. This approach allows you to monitor the behavior of the new release in a controlled environment before fully deploying it to all users.
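Canary traffic splitting is usually handled by a load balancer or service mesh, but the core idea fits in a few lines. Hash a stable key such as the user ID into 100 buckets, and send the buckets below the rollout percentage to the new version. routeVersion below is a hypothetical helper using FNV-1a hashing. Because the hash is deterministic, each user stays on the same version across requests, and raising the percentage only adds users to the canary. The same bucketing also works for the A/B splits described next.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// routeVersion deterministically assigns a user to "canary" or "stable";
// canaryPercent (0-100) is the share of users who see the new release.
func routeVersion(userID string, canaryPercent uint32) string {
	h := fnv.New32a()
	h.Write([]byte(userID)) // hash.Hash's Write never returns an error
	if h.Sum32()%100 < canaryPercent {
		return "canary"
	}
	return "stable"
}

func main() {
	const users = 10000
	canary := 0
	for i := 0; i < users; i++ {
		if routeVersion(fmt.Sprintf("user-%d", i), 5) == "canary" {
			canary++
		}
	}
	fmt.Printf("%d of %d users routed to canary (target 5%%)\n", canary, users)
}
```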

7. A/B Testing

A/B testing is a method for comparing two versions of a service to determine which one performs better. In the context of microservices, you can route a portion of your traffic to different service versions to assess their performance and user satisfaction.

8. Blue-Green Deployments

In blue-green deployments, you maintain two separate environments, one with the current version (blue) and one with the new version (green). To update your service, you switch traffic from the blue environment to the green one. This approach enables quick rollbacks if issues arise.
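The essence of a blue-green cutover is a single atomic switch between two complete environments, with the old one kept intact for rollback. In practice this switch usually lives in a load balancer or a Kubernetes Service selector. The hypothetical BlueGreenRouter below only illustrates the mechanism in Go; it uses atomic.Bool, which requires Go 1.19 or later, and the addresses are made up.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// BlueGreenRouter holds the addresses of two full environments and an atomic
// flag selecting which one receives new traffic.
type BlueGreenRouter struct {
	blue, green string
	greenLive   atomic.Bool
}

// Target returns the address new requests should be sent to.
func (r *BlueGreenRouter) Target() string {
	if r.greenLive.Load() {
		return r.green
	}
	return r.blue
}

// Cutover moves all new traffic to green in one atomic step.
func (r *BlueGreenRouter) Cutover() { r.greenLive.Store(true) }

// Rollback returns traffic to blue, which was never torn down.
func (r *BlueGreenRouter) Rollback() { r.greenLive.Store(false) }

func main() {
	// Hypothetical in-cluster addresses for the two environments.
	r := &BlueGreenRouter{blue: "payments-blue:50051", green: "payments-green:50051"}
	fmt.Println("before:", r.Target())
	r.Cutover()
	fmt.Println("after cutover:", r.Target())
	r.Rollback()
	fmt.Println("after rollback:", r.Target())
}
```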

9. Observability and Monitoring

Monitoring is an integral part of both testing and deployment. Implement comprehensive observability tools to monitor the health and performance of your microservices. Set up alerts and dashboards to detect issues in real time.

10. Disaster Recovery and Rollback Plans

Plan for disaster recovery by defining strategies for handling service outages and unexpected issues. Establish rollback plans to revert to a previous version in case a new release causes problems.

11. Deployment Automation

Automate your deployment process to minimize human error and ensure consistent and reliable deployments. Tools like Kubernetes can simplify the orchestration of microservice deployments.

12. Zero-Downtime Deployments

Strive for zero-downtime deployments. Make sure new instances of a service are running and healthy before old ones are shut down, and let old instances finish their in-flight requests before they exit. This minimizes disruption for users.

13. Security Testing

Incorporate security testing into your CI/CD pipeline. Tools like static code analyzers and vulnerability scanners can help identify potential security issues before they reach production.

14. Chaos Engineering

Consider implementing chaos engineering practices to proactively test the resilience of your microservices. By intentionally injecting failures into your system, you can identify vulnerabilities and improve fault tolerance.

Effective testing and deployment practices are essential for maintaining the reliability and stability of your gRPC-based microservices. By following these best practices, you can confidently release and manage your services in production.

10. Real-Life Use Cases and Production-Grade Examples

Now that we've covered the essential aspects of building gRPC microservices, let's dive into real-life use cases and production-grade examples of how gRPC is employed in various industries.

1. E-Commerce: Order Processing

Imagine an e-commerce platform that relies on microservices for order processing. Each microservice handles a specific aspect of the order lifecycle, such as inventory management, payment processing, and shipping coordination. These microservices communicate via gRPC, ensuring fast and reliable order processing. Here's an example of how the gRPC definition might look for the payment service:

syntax = "proto3";

package ecommerce;

service PaymentService {
  rpc ProcessPayment (PaymentRequest) returns (PaymentResponse);
}

message PaymentRequest {
  string order_id = 1;
  double amount = 2;
  string payment_method = 3;
}

message PaymentResponse {
  bool success = 1;
  string message = 2;
}

2. Healthcare: Telemedicine

In the healthcare industry, gRPC can serve as the communication layer for telemedicine applications. Microservices enable features like real-time video consultations, appointment scheduling, and secure data exchange, and gRPC-based communication between them helps keep latency low and data transfer reliable.

3. Finance: Fraud Detection

Financial institutions use gRPC microservices to enhance fraud detection and prevention. These services may need to screen very high transaction volumes with low latency, which is where gRPC's performance and security features help. Here's a simplified example of a fraud detection service:

syntax = "proto3";

package finance;

service FraudDetectionService {
  rpc CheckTransaction (TransactionRequest) returns (TransactionResult);
}

message TransactionRequest {
  string transaction_id = 1;
  string account_id = 2;
  double amount = 3;
}

message TransactionResult {
  bool is_fraud = 1;
  string reason = 2;
}
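To show how such a contract might be served, here is a rule-based sketch of the CheckTransaction logic. The structs are hand-written stand-ins for the protoc-generated finance types. The two rules (a per-transaction amount limit and a blocklist of accounts) are illustrative assumptions. In a real gRPC handler, the validation error would typically be returned as status.Error(codes.InvalidArgument, ...).

```go
package main

import (
	"errors"
	"fmt"
)

// TransactionRequest and TransactionResult mirror the finance messages;
// real code would use the protoc-generated types.
type TransactionRequest struct {
	TransactionID string
	AccountID     string
	Amount        float64
}

type TransactionResult struct {
	IsFraud bool
	Reason  string
}

// fraudChecker applies simple, hypothetical rules. Production systems usually
// combine many more signals (history, device, geography, model scores).
type fraudChecker struct {
	maxAmount float64
	blocked   map[string]bool
}

func (c *fraudChecker) CheckTransaction(req TransactionRequest) (TransactionResult, error) {
	if req.TransactionID == "" || req.AccountID == "" {
		return TransactionResult{}, errors.New("transaction_id and account_id are required")
	}
	switch {
	case c.blocked[req.AccountID]:
		return TransactionResult{IsFraud: true, Reason: "account is blocked"}, nil
	case req.Amount > c.maxAmount:
		reason := fmt.Sprintf("amount %.2f exceeds limit %.2f", req.Amount, c.maxAmount)
		return TransactionResult{IsFraud: true, Reason: reason}, nil
	}
	return TransactionResult{IsFraud: false}, nil
}

func main() {
	checker := &fraudChecker{maxAmount: 10000, blocked: map[string]bool{"acct-666": true}}
	for _, req := range []TransactionRequest{
		{"tx-1", "acct-1", 250},
		{"tx-2", "acct-1", 25000},
		{"tx-3", "acct-666", 10},
	} {
		res, err := checker.CheckTransaction(req)
		fmt.Printf("%s: %+v err=%v\n", req.TransactionID, res, err)
	}
}
```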

4. Travel: Booking and Reservations

Travel booking platforms use gRPC microservices to provide real-time booking and reservation services. Services for hotel reservations, flight bookings, and car rentals communicate efficiently using gRPC, ensuring that users receive up-to-date information and can make bookings seamlessly.

5. Gaming: Multiplayer Games

The gaming industry leverages gRPC for building scalable and responsive multiplayer games. Real-time communication, player interactions, and game state synchronization are achieved through gRPC-powered microservices.

6. IoT: Smart Home Automation

In the Internet of Things (IoT) domain, gRPC microservices enable smart home automation. Devices such as thermostats, lights, and security cameras communicate with a central controller using gRPC, allowing homeowners to control and monitor their homes remotely.

7. Social Media: Activity Feeds

Social media platforms use gRPC to create activity feeds, where users can see real-time updates from their friends. Microservices handle the distribution and aggregation of feed data, ensuring a responsive user experience.

The service definitions above are simplified, illustrative examples rather than schemas taken from real production systems. In production-grade scenarios, these services would include additional fields, authentication, security measures, and extensive testing.

Real-life use cases demonstrate the versatility of gRPC and its ability to meet the communication needs of a wide range of applications and industries. By utilizing gRPC, businesses can create high-performance, scalable, and reliable microservices tailored to their specific requirements.

11. Conclusion: Building Production-Grade Microservices with gRPC


In this comprehensive article, we've explored the world of building production-grade microservices with gRPC. Let's summarize the key takeaways and emphasize the importance of adopting gRPC for microservices development.

Key Takeaways

Efficiency and Performance: gRPC offers efficient and high-performance communication between microservices, making it a top choice for building scalable and responsive applications.

Language-agnostic: gRPC's support for multiple programming languages enables developers to use the language they are most comfortable with while building microservices.

Protocol Buffers (Protobuf): The use of Protobuf for message serialization ensures efficient data transfer between services, reducing both latency and network load.

Service Definitions: gRPC service definitions provide a contract for service interactions, enabling clients and servers to communicate seamlessly.

Error Handling and Fault Tolerance: Implement effective error handling, retry policies, circuit breakers, and other fault tolerance mechanisms to ensure service reliability.

Performance Optimization: Techniques such as load balancing, connection pooling, and payload compression are essential for optimizing the performance of your microservices.

Testing and Deployment: Rigorous testing, continuous integration, and automated deployment pipelines are vital for maintaining stable and reliable microservices.

Real-Life Use Cases: gRPC is applied in various industries, including e-commerce, healthcare, finance, travel, gaming, IoT, and social media, to build efficient and responsive microservices.

Why gRPC Matters for Microservices

Scalability: gRPC's efficiency and performance characteristics make it an excellent choice for microservices that need to scale rapidly and handle a large number of requests.

Reliability: Strongly typed Protobuf contracts, explicit service definitions, and comprehensive testing help keep gRPC microservices reliable and robust, even in complex distributed systems.

Interoperability: Because gRPC generates client and server code for many programming languages, it is a practical choice for diverse development teams.

Security: With built-in support for authentication and encryption, gRPC helps protect the integrity and confidentiality of data in microservices.

Ecosystem: gRPC has a thriving ecosystem with tools, libraries, and community support, making it a well-supported choice for microservices development.

In Closing

That's all for this, my friends. See you later!

Similar to this knowledge bomb, I, along with other open-source-loving dev folks, run a developer-centric community on Slack, where we discuss these kinds of topics, implementations, integrations, some truth bombs, weird chats, virtual meets, open-source contributions, and everything else that helps a developer remain sane ;) After all, too much knowledge can be dangerous too.

I'm inviting you to join our free community (no ads, I promise, and I intend to keep it that way), take part in discussions, and share your freaking experience & expertise. You can fill out this form, and a Slack invite will ring your email in a few days. We have amazing folks from some of the great companies (Atlassian, Gong, Scaler), and you wouldn't wanna miss interacting with them. Invite Form

And I would be highly obliged if you could share that form with your dev friends who are givers.